Kernels Without Abstractions

A History and Motivation

Over the last few decades, we have seen a boom in technology, both in the power of the machines we can build and in how widely and socially those machines are used. We have built extensive networks, of wires and of people, that have greatly augmented our lives. Indeed, we have become dependent on computers.

However, as we continue to grow and push technology to its limits, we must always be willing to pause and look back to the center. All code, and thus every network and every protocol, has an origin once it executes: an arbiter, a layer of hardware and software underneath it. All code must navigate this layer, understand it, and, in a very real sense of the word, negotiate with it in order to run.

This layer is referred to as the operating system, which, by a broad definition, is the software that manages system resources on behalf of applications. Although most of the public may know or acknowledge this program as the ultimate authority of a machine, the operating system itself has its own layers, abstractions, and methods of isolation. The most important layer is also its core component: the kernel.

The operating system is the component primarily involved in connecting humans to hardware.

Because the kernel is the core component, the decisions made in it are effectively made for the application programmer and cannot easily be thwarted, changed, influenced, avoided, or destroyed. And because decisions made at this layer reflect the time in which they were written, understanding kernel design obliges us to view the software alongside its history.

The fact of the matter, and an alarming one, is that the kernel design we use nearly every day without protest is badly outdated. It reflects a series of decisions that in turn reflect a time long past, especially given how quickly technology develops. It is important to ask not only which designs are better, but also why outdated designs persist and how designs affect people.

To answer these questions, we have to look back.

UNIX

The kernels that are most prevalent today can all trace their origins to one system: UNIX. Begun in 1969 at AT&T's Bell Labs, it quickly became the most popular system for new machines. Its design was motivated by the lack of standardized hardware in that era: there was a need for an operating system that could be easily ported to new machines and could easily communicate with heterogeneous machines.

To do this, Bell Labs designed a programming language, C, that could express a normally low-level concept as a high-level description and compile it for a variety of hardware architectures. UNIX, first written in assembly language, was then rewritten in C, and the system's behavior was later codified in a published specification [1].

Dennis Ritchie and Ken Thompson were the instrumental designers of UNIX.

UNIX became widely successful through this standardization, which the era demanded. Standardization allowed organizations with strict requirements, such as governments and corporations, to adopt it. Overall, the process allowed hardware to compete more freely, albeit at the cost of crippling competition in software. Nevertheless, the group managing UNIX called it a "critical enabler for a revolution in information technology" [2].

Another measure of its success is how it inadvertently paved the way for the first open source movement. Although UNIX was designed and implemented by Bell Labs, its parent company, AT&T, was bound by an antitrust settlement known as the "1956 Consent Decree": it could not sell products unrelated to its telephone business. Software fell under that rule, so AT&T was effectively forced to give away the system and its source [3]. As an aside, the same decree required AT&T to license its existing patents royalty-free, which made the transistor, the electrical component essential to all modern computing, freely available as well.

Linux

UNIX prospered for over two decades without any direct competition. However, in the early 1990s, Linux was written from scratch as a UNIX replacement, and over the next decade it surged in popularity in many of the same contexts as UNIX. Why would a standardized operating system suddenly face competition, and why would anyone be motivated to replace it with a nearly identical copy?

The motivation was never a technical one. In 1982, AT&T settled the US government's antitrust suit by agreeing to be broken up into regional Bell companies, a breakup that took effect in 1984. In return, the 1956 decree was lifted. This allowed the smaller, yet just as organized, AT&T to finally turn UNIX into a product [4].

Overnight, an environment fueled by open source and free availability was reduced to a commercial enterprise. UNIX had to be licensed. UNIX had to be bought. Thousands of tools built on UNIX, which promised to work on a multitude of hardware, could no longer be used without licensing the UNIX operating system.

To counter this increasingly antagonistic environment, a second open source movement arose almost simultaneously from multiple, independent groups: GNU and BSD. These groups established licenses, sets of legal terms attached to their code, to ensure that such a situation could not arise again.

Thus, the motivation to produce a new UNIX was societal. To keep using the open tools that were already widespread, people had to create a substitute that would stand the test of time. Linux, although originally just a hobby project by Linus Torvalds, became the kernel of this new open system, paired with the GNU operating system.

The UNIX Abstraction

Through this timeline, we can see why UNIX was so successful. It masked the difficulties of its time: unstandardized hardware in an emerging, competitive hardware market. The resulting body of tools, which fit only one system design, in turn explains Linux's similar success in the modern era: a replacement system was essential.

However, we should now ask how well the decisions behind UNIX, made over 40 years ago, work today. The UNIX design rests on a core philosophy: that everything in the system be modeled by a single, unified abstraction called the file. Every component of the system that supports these behaviors (read, write, seek, etc.) is represented as the same type of object, including networks, IPC, keyboard input, program output, process metadata, hardware information, and, most obviously, files on disk.

The merit of such an abstraction is substantial. A program can be written to expect and handle only this one kind of object. It reads and writes freely, knowing that certain behaviors will occur predictably regardless of how the operating system actually performs the operations behind the scenes. For example, you can write one small application that hashes a data stream with, say, a variant of SHA-2. This one program can hash data from a network, from keyboard input, or from disk, all without changes to its code. You simply supply it a "file."
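To make the hashing example concrete, here is a minimal Python sketch, assuming only that the input object offers a binary read() method. SHA-256 stands in for "a variant of SHA-2", and the function name hash_stream is hypothetical; nothing in it knows whether the bytes come from a disk file, a socket, or the keyboard.

```python
import hashlib
from typing import BinaryIO


def hash_stream(stream: BinaryIO, chunk_size: int = 4096) -> str:
    """Return the SHA-256 hex digest of everything readable from `stream`.

    Only stream.read() is used, so the caller may pass an open disk file, a
    socket's makefile("rb"), a pipe, or sys.stdin.buffer without any change here.
    """
    digest = hashlib.sha256()
    # iter() keeps calling read() until it returns b"", i.e. end of stream.
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()
```

Called as hash_stream(sys.stdin.buffer) from a script, the same function would hash whatever the shell connects to standard input, whether a redirected file or the output of another program.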

This reduces program complexity noticeably without adding considerable user complexity. The shell, the program that provides the human interface between person and machine, offers the ability to pipe, that is, to direct the output of one program into the input of another. It exposes this operation as a single character: |, the pipe operator. For instance, `cat music.mp3 | mplayer -` will stream the music file to mplayer (the trailing `-` tells mplayer to read from its standard input). One could just as easily have piped in a program that pulls the stream from a website, or a program that generates random input, without requiring any change to the mplayer application.
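Underneath the shell, a pipe is simply a pair of file descriptors, so a reader written against plain read() cannot tell it apart from a disk file. The following Python sketch illustrates this with the standard os.pipe, os.read and os.write wrappers around the corresponding POSIX calls; the helper names read_all, via_pipe and via_file are hypothetical.

```python
import os
import tempfile

# Helper names are hypothetical; os.pipe/os.read/os.write are the standard
# wrappers around the POSIX pipe(), read() and write() calls.


def read_all(fd: int, chunk_size: int = 4096) -> bytes:
    """Read a file descriptor to end-of-file using nothing but os.read()."""
    parts = []
    while True:
        chunk = os.read(fd, chunk_size)
        if not chunk:  # b"" signals end-of-file
            break
        parts.append(chunk)
    return b"".join(parts)


def via_pipe(data: bytes) -> bytes:
    """Push `data` through an anonymous pipe, as the shell does for `a | b`."""
    read_end, write_end = os.pipe()
    try:
        # Small payloads fit in the kernel's pipe buffer, so writing before
        # reading from a single thread does not block here.
        os.write(write_end, data)
    finally:
        os.close(write_end)  # closing the write end lets the reader see EOF
    try:
        return read_all(read_end)
    finally:
        os.close(read_end)


def via_file(data: bytes) -> bytes:
    """Push the same `data` through an ordinary temporary file on disk."""
    with tempfile.TemporaryFile() as f:
        f.write(data)
        f.flush()
        f.seek(0)
        return read_all(f.fileno())
```

On POSIX systems, read_all would behave the same if handed the descriptor of a socket or a terminal; that indifference is what lets `|` connect arbitrary programs.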

The UNIX philosophy expresses this idea in a set of tenets, among them "Make each program do one thing well" and "Store data in flat text files" [5]. Essentially, a program should perform only one action, hashing in our example. And since one does not know what might consume a program's output, that output should be as simple as possible: text, since a human or a simple tool may need to read it.

Monolithic Kernel

Benefits aside, there is still the question of where such an abstraction should live. The UNIX model, which Linux emulates, places the abstractions alongside the interfaces and the hardware, all inside the kernel. A unified abstraction means that every subsystem it ties together is visible at the point of the abstraction. This is known as the monolithic model, and it can easily be argued to be the most naive kind of kernel design. It is also the design used in Microsoft Windows (the NT kernel) and Apple's OS X (Darwin/XNU), although both are often described as hybrid kernels with microkernel roots.

One reason for this design is that the naive approach to isolation (namely, none) is simply easier to develop. UNIX was also developed at a time when ideas for isolating system components were immature and too risky to implement. Over the last 40 years, however, many techniques have emerged that help designers manage system complexity without much extra development effort.

Another reason is the misconception that monolithic kernels offer better performance. I have already co-written an essay about the error of this assumption. In system design, performance is not a meaningful basis for comparing designs, nor is it gained by eliminating isolation while adding strict, broad abstractions. It is honestly rather strange that systems developers and researchers adhere so strongly to such an obviously flawed understanding.

Some researchers have written essays that can be read as expressing frustration with this habit of comparing systems by their performance. Dawson Engler, co-designer of the exokernel, argues that eliminating abstractions from the kernel and pushing them out into a more diverse and complicated application space costs nothing in performance [6]. James Larkey-Lahet, co-designer of XOmB, gives an informal illustration that simple low-level interfaces offer the flexibility to build higher-level components without loss. The existence of such systems also shows that the monolithic argument is not a proven fact. It is a hypothesis growing frail, persisting on faith alone and fueled by an aging pride.

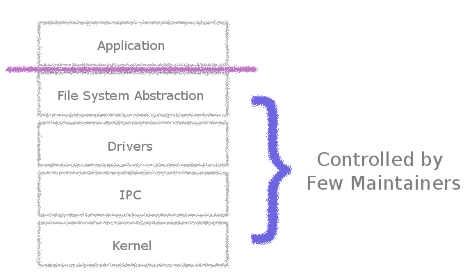

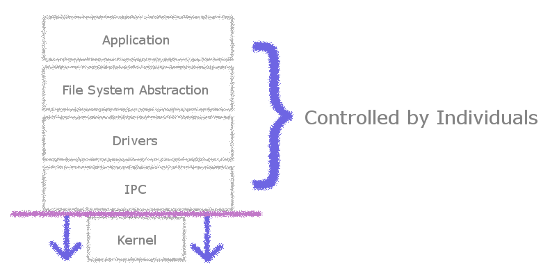

The elements of a monolithic kernel are controlled by a small group.

Another, and perhaps most important, condemnation of the monolithic design is its reflection of societal structure. Such a broad abstraction places a line of control and influence between application developers and kernel developers. Application developers are placated by the abstraction and are dependent on kernel developers to add special cases to gain the promised performance behind this line. Kernel developers become maintainers for the arbitration of system resources, and thus become arbiters themselves on how hardware can be utilized.

When application developers attempt to cross this line themselves, they are generally met with aggression by a small group of maintainers. In Windows, developers cannot even inspect what lies behind the line, because the source code is closed. Linux, despite its open source status, does not offer a much better alternative. One can read the source code and even discover improvements, but getting a change accepted into the greater whole requires the approval of Linus Torvalds. He represents a societal single point of failure. He verbally harasses people who send code he does not like. He berates people who use applications and tools that he does not like. He berates his own kernel developers for not reviewing code in exactly the manner he does.

Linus Torvalds, creator of Linux, holds control over decisions made in the kernel.

I will not accept any notion that Linus does this to ensure quality or to discourage bad code, or that acting this way in any way improves the quality of Linux. That notion is a fallacy, yes, and a double standard, as my friend Tim points out with the founder of GNU commenting in approval; but ultimately Linus's behavior itself is beside the point. What it does reveal is that we will go out of our way to placate the behavior of a single person because we naively believe that one person can single-handedly control the quality of a system that has over 15 million lines of code and is growing exponentially. Put more generally, monolithic design lends itself to hierarchical authority, where decisions important to most are made by so very few.

Microkernel

There are a few alternative designs that can alleviate these social and technical concerns without loss of performance. They are all motivated to remove elements of control from centralized, monolithic systems. If monolithic designs are improper because components have too much influence on other, unrelated parts of the system, then one idea is to separate those concerns.

One such system is the microkernel, which employs the principle of least privilege, widely considered essential to secure systems [7]. Each component of the system runs in its own isolated environment while still having full privileges over whatever hardware or resources it needs to do its work. For example, network access would be provided not by some section of a large, rigid monolith, but by a single entity devoted to that task. This program is responsible only for handling network access on behalf of applications and cannot access or manipulate any other part of the system.
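The following toy sketch models only the structure just described, under loud assumptions: the Kernel, Message and NetworkServer names are hypothetical, and a Python list stands in for the network card. Python objects are not actually isolated from one another, so this illustrates the message-passing shape of a microkernel, not its protection.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Toy model only: the names are hypothetical, and Python provides no real
# isolation between these objects.


@dataclass
class Message:
    sender: str
    op: str
    payload: bytes = b""


Handler = Callable[[Message], bytes]


class Kernel:
    """A toy microkernel whose only job is delivering messages to services."""

    def __init__(self) -> None:
        self._services: Dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        # Registering again under the same name replaces (e.g. restarts) a service.
        self._services[name] = handler

    def send(self, dest: str, msg: Message) -> bytes:
        # The kernel routes the message but never interprets its payload.
        handler = self._services.get(dest)
        if handler is None:
            raise LookupError(f"no service named {dest!r}")
        return handler(msg)


class NetworkServer:
    """A user-space component that alone owns the (simulated) network card."""

    def __init__(self) -> None:
        self.wire: List[bytes] = []  # stands in for the card's transmit queue

    def handle(self, msg: Message) -> bytes:
        if msg.op == "send":
            self.wire.append(msg.payload)
            return b"ok"
        return b"unsupported"
```

Because applications reach the network only through Kernel.send, replacing a misbehaving network server is just another register call, which mirrors the restartability that motivated the design.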

The central component, the kernel itself, only needs to facilitate very low-level access and communication between applications and these specialized components. With a small kernel, the system can be made far more reliable. Such kernels are small and unspecialized enough that it has even been possible to formally verify their correctness, as with the seL4 system [10].

In fact, the initial motivation for this design over a monolithic kernel was reliability. If one part of the system failed or behaved incorrectly, it could not affect the other, isolated parts. The failed component could even be restarted without considering the ramifications for other system components. With this in mind, the principle of least privilege came to be considered essential for providing fault tolerance [8].

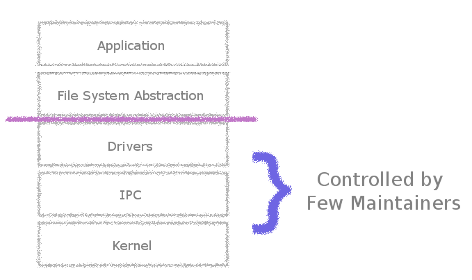

The microkernel pushes more control to individual developers.

Yet we must also point out the social effect of the microkernel's approach. It effectively limits authority and distributes control. And just as the monolithic design reflected its social surroundings, this design suggests that authority and influence over a microkernel system would likewise be more limited, and that the system would benefit from a wider audience of programmers and designers.

Microkernels have been around for decades, but they have not replaced monolithic designs in all situations. They have seen past success on smaller devices, such as cellular phones, where reliability is a major concern. Yet smartphones have once again skewed system usage toward monolithic designs.

There is no valid argument for why microkernels have failed to the degree that they have. Now that the embedded space is falling to monolithic designs, we are seeing a world with only three major systems in use altogether: Windows, iOS, and Linux.

However, if we want to design new systems that give us both technical efficiency and social influence and control, we should go even further than the microkernel. We require more than just reliability. We must eliminate as much authority as possible and give the individual the greatest possible ability to control the system.

Exokernel

More recently, a new class of system has emerged. The exokernel is a range of designs that attempt to reduce the kernel to simply a security agent. Under its narrowest definition, an exokernel just ensures that any hardware an application asks for is granted, as long as it is deemed secure to do so.

Under that definition, the exokernel will never impose any restriction or rule on how that hardware is used. For instance, a microkernel has system components that, although isolated from the kernel, still impose a set of rules and arbitrate access. With an exokernel, an application could use such a technique, or it could access the hardware directly. This flexibility allows the exokernel to be the root of any type of system as defined by application developers.
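A toy sketch can make the "security agent only" idea concrete. Loosely in the spirit of what the exokernel literature calls secure bindings, the hypothetical Exokernel class below records which application owns each (simulated) disk block and checks ownership on every access, but it never decides what goes in a block or how blocks form files. It is not modeled on Xok's actual interfaces.

```python
from typing import Dict, List

# Toy model only; the class and method names are hypothetical.


class Exokernel:
    """Tracks which application owns each disk block, and nothing else.

    A free block is granted to whoever asks for it; afterwards every access is
    checked against that ownership record. The kernel never decides what goes
    in a block, how blocks are grouped into files, or in what order they are
    written: those choices are left entirely to the application.
    """

    def __init__(self, num_blocks: int, block_size: int = 512) -> None:
        self.block_size = block_size
        self._disk: List[bytes] = [bytes(block_size) for _ in range(num_blocks)]
        self._owner: Dict[int, str] = {}

    def allocate(self, app: str, block: int) -> None:
        """Bind `block` to `app` if it exists and is free (or already `app`'s)."""
        if not 0 <= block < len(self._disk):
            raise IndexError(f"no such block: {block}")
        owner = self._owner.get(block)
        if owner is not None and owner != app:
            raise PermissionError(f"block {block} belongs to {owner}")
        self._owner[block] = app

    def _check(self, app: str, block: int) -> None:
        # The only policy the kernel enforces: you may touch what you own.
        if self._owner.get(block) != app:
            raise PermissionError(f"{app} does not own block {block}")

    def read(self, app: str, block: int) -> bytes:
        self._check(app, block)
        return self._disk[block]

    def write(self, app: str, block: int, data: bytes) -> None:
        self._check(app, block)
        if len(data) > self.block_size:
            raise ValueError("data does not fit in one block")
        # Zero-pad to a full block; the byte layout is the application's choice.
        self._disk[block] = data.ljust(self.block_size, b"\x00")
```

Everything an application might want on top of this (files, caching, scheduling of writes) would live in application code, where it can be kept, changed, or skipped.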

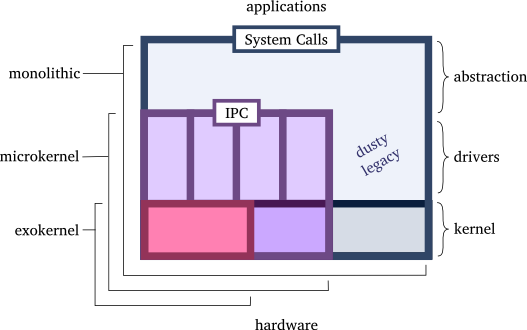

Therefore, the exokernel should be seen not as a separate design, but as the reduction of all the designs and efforts that preceded it. A microkernel is an exokernel where isolated applications take hardware for their own and force other applications to communicate with them in order to use it. A monolithic kernel can be seen as a large system that must have, somewhere deep inside it, the pieces of an exokernel.

The exokernel is the smallest design of the three and provides the most flexibility.

In terms of reliability, the exokernel provides not only the microkernel's principle of least privilege but also the principle of least common mechanism, which holds that a component shared widely across a system is more likely to compromise security widely [7]. The exokernel improves reliability over a microkernel by allowing system components to be diverse, since application developers can patch or even replace system components with their own implementations.
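As a hedged illustration of that diversity, assume a hypothetical block cache that each application links into its own address space as a library. Because it is a library rather than a shared kernel service, each application can plug in its own eviction policy. The names BlockCache, evict_lru and evict_mru are illustrative, not taken from any real exokernel library.

```python
from collections import OrderedDict
from typing import Callable, List

# Hypothetical library-level cache; all names are illustrative.
# A policy inspects the cache (ordered least to most recently used)
# and returns the block number to drop.
EvictionPolicy = Callable[[OrderedDict], int]


def evict_lru(cache: OrderedDict) -> int:
    """Drop the least recently used block (a common general-purpose default)."""
    return next(iter(cache))


def evict_mru(cache: OrderedDict) -> int:
    """Drop the most recently used block (helps with repeated sequential scans)."""
    return next(reversed(cache))


class BlockCache:
    """A block cache that lives in the application, not in the kernel."""

    def __init__(self, capacity: int, policy: EvictionPolicy,
                 fetch: Callable[[int], bytes]) -> None:
        self.capacity = capacity
        self.policy = policy
        self.fetch = fetch  # how to read a block from the device on a miss
        self._cache: OrderedDict = OrderedDict()

    def get(self, block: int) -> bytes:
        if block in self._cache:
            self._cache.move_to_end(block)  # mark as most recently used
            return self._cache[block]
        if len(self._cache) >= self.capacity:
            del self._cache[self.policy(self._cache)]
        data = self.fetch(block)
        self._cache[block] = data
        return data

    def cached(self) -> List[int]:
        """Block numbers currently cached, least to most recently used."""
        return list(self._cache)
```

An application that repeatedly scans a file slightly larger than its cache would, under LRU, evict each block just before needing it again; with this arrangement it can simply choose evict_mru, whereas under a monolithic kernel it typically has to accept the policy of the shared buffer cache.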

In terms of performance, the exokernel has been shown to outperform stock monolithic kernels. For instance, the developers of the Xok exokernel asked the question "How can you serve files from disk over a network without copying the information?" Such a task is extremely common on the internet, yet it is impossible through the standard file and socket abstractions of conventional monolithic kernels. With the flexibility of the exokernel, however, an application developer could take the disk driver library and store the file on disk already separated into packets, the bite-sized pieces of information preferred for transmission over a network. These packets are, of course, not filled in completely. This is like dividing something large into many boxes with blank shipping labels to make shipping easier. With this representation, the application can simply read the packets off the disk into a buffer, set the empty fields in the packets, and have a network driver library send them off.

This eliminates all copying of memory: the data is simply read from disk with a (fast) DMA transfer and sent. The Xok developers built the Cheetah web server with this technique. Developing it required no changes to the underlying kernel and yielded a 400-800% increase in performance over the abstractions available on typical monolithic kernels [9].
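A minimal Python sketch of the layout described above. Everything specific in it is an assumption, not Cheetah's actual format: the 8-byte header (sequence number, destination port, payload length), the 1024-byte payload size, and the function names are hypothetical. A bytearray stands in for the buffer a disk read fills, and yielding a memoryview stands in for handing that memory to a network driver library.

```python
import struct
from typing import Iterator

# Hypothetical 8-byte header: sequence number and destination port are left
# blank (zero) on disk; only the payload length is known when storing.
HEADER = struct.Struct("!IHH")  # seq: uint32, dst_port: uint16, length: uint16


def store_as_packets(data: bytes, payload_size: int = 1024) -> bytes:
    """Lay `data` out as a run of fixed-size, partially filled packets."""
    records = []
    for off in range(0, len(data), payload_size):
        payload = data[off:off + payload_size]
        blank_label = HEADER.pack(0, 0, len(payload))
        # Pad the last payload so every record has the same on-disk size.
        records.append(blank_label + payload.ljust(payload_size, b"\x00"))
    return b"".join(records)


def send_packets(buf: bytearray, dst_port: int, first_seq: int,
                 payload_size: int = 1024) -> Iterator[memoryview]:
    """Fill in each blank header in place and yield the finished packet.

    `buf` stands in for the memory a disk read (e.g. DMA) has filled; the
    yielded memoryview stands in for handing that memory to a network driver.
    Payload bytes are never copied; only the header fields are written.
    """
    record_size = HEADER.size + payload_size
    view = memoryview(buf)
    for i, off in enumerate(range(0, len(buf), record_size)):
        _, _, length = HEADER.unpack_from(buf, off)
        HEADER.pack_into(buf, off, first_seq + i, dst_port, length)
        yield view[off:off + HEADER.size + length]
```

Note that send_packets writes only the header bytes of each record; the payload stays exactly where the disk read left it, which is the property the technique relies on.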

Of course, monolithic kernels could simply add a separate, specialized path to perform the same optimization. In conversation, Greg Ganger, who worked on Cheetah, suggested that a corporation had indeed done this and achieved the same performance. The point, however, is not that an exokernel allows one piece of software to do this, but that it gives any application developer the ability to do so.

The elements of an exokernel are controlled by any application developer.

That brings us, yet again, to the most important point: the exokernel provides a better social platform for systems. In this design, the kernel has been reduced to its smallest size. Beyond passing the kernel's security check, applications do not need its permission to access hardware directly. Application developers can completely rewrite drivers if they so wish. That is, they have complete influence over the system and thus complete control over their own hardware.

Interface over Abstraction

I posit that the most important issue in systems design is reducing the effort it takes an individual to bend a system to their own will. With that in mind, the traditional monolithic model is the least ideal. It restricts control and gives most of its authority to the kernel developers, who understand the underlying system best.

The microkernel and then the exokernel show us that the technical merits of the monolithic approach are illusory. One can design a system in which control and authority are granted to individuals through their applications while still providing a very extensible, flexible, and efficient system. In fact, exokernel systems have been shown to provide greater efficiency than monolithic designs more easily.

Therefore, when we ask why these monolithic systems persist, we must reject the technical argument. We must instead look at how society affects the technical design of our systems: for instance, the ease of implementing monolithic systems, and the unjustified societal impetus toward authority over centralized systems. From this new perspective, we must come to a stronger consensus on why certain system designs fail.

Furthermore, what we can take away from this discussion toward the creation of new systems is that it is becoming ever clearer that minimal interfaces are superior to unified abstractions. An interface is a thin layer that describes exactly what occurs on the other side, whereas an abstraction is a description of behavior that the system guarantees by any means. Interfaces must exist, yet abstractions can be rebuilt upon any interface or abandoned completely.

For example, one can always build the UNIX abstraction on an exokernel. If you prefer files, or wish to keep the existing ecosystem of tools without changing their source code, then you can. You can always recreate that same abstraction on top of a minimal interface to the hardware; the trivial proof is UNIX itself, which does exactly that. Remember that the exokernel imposes no rules on application space. What is problematic is when such an abstraction is the system. When the abstraction is not known to the kernel, or even to the system at large, an application can choose to ignore it whenever necessary.
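To make the interface/abstraction distinction concrete, here is a toy Python sketch in which the only interface is a block device with read_block and write_block, and a small file abstraction with read and seek is built on top of it in ordinary application code. All class and method names are hypothetical, and the "device" is a Python list. An application that does not want files can call read_block directly.

```python
from typing import List

# Toy model only; class and method names are hypothetical.


class BlockDevice:
    """A minimal interface: numbered, fixed-size blocks and nothing more."""

    def __init__(self, num_blocks: int, block_size: int = 512) -> None:
        self.block_size = block_size
        self._blocks = [bytearray(block_size) for _ in range(num_blocks)]

    def read_block(self, n: int) -> bytes:
        return bytes(self._blocks[n])

    def write_block(self, n: int, data: bytes) -> None:
        if len(data) != self.block_size:
            raise ValueError("must write exactly one block")
        self._blocks[n][:] = data


class File:
    """A UNIX-like read/seek abstraction built entirely in application code.

    `blocks` lists the device blocks holding the file, in order, and `size`
    is its length in bytes. The device knows nothing about this object.
    """

    def __init__(self, dev: BlockDevice, blocks: List[int], size: int) -> None:
        self.dev = dev
        self.blocks = blocks
        self.size = size
        self.pos = 0

    def seek(self, pos: int) -> None:
        self.pos = max(0, min(pos, self.size))  # clamp to the file's bounds

    def read(self, n: int = -1) -> bytes:
        """Read up to n bytes (all remaining bytes if n < 0) from the position."""
        end = self.size if n < 0 else min(self.size, self.pos + n)
        out = bytearray()
        bs = self.dev.block_size
        while self.pos < end:
            index, offset = divmod(self.pos, bs)
            block = self.dev.read_block(self.blocks[index])
            take = min(bs - offset, end - self.pos)
            out += block[offset:offset + take]
            self.pos += take
        return bytes(out)
```

The file abstraction here is just one client of the block interface; a different application could lay out the same blocks as packets, database pages, or anything else, without asking the device or any kernel for permission.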

This suggests that the individual can ignore system developers whenever they wish to do so. No longer can one small group decide a scheduling policy, or how to buffer a network socket to fit file access abstractions, or the manner in which one speaks to hardware. The power over hardware is directly in the hands of those that hold it.

References

1. The Open Group. The Single UNIX Specification, Version 3. May 2003.

2. The UNIX System Cooperative Promotion Group. The UNIX Operating System: Mature, Standardized and State-of-the-Art.

3. United States v. Western Electric Co., 1956 Trade Cas. (CCH) ¶ 68,246 (D.N.J. 1956).

4. United States v. AT&T, 552 F. Supp. 131 (D.D.C. 1982), aff'd sub nom. Maryland v. United States, 460 U.S. 1001 (1983).

5. Mike Gancarz. The Unix Philosophy. ISBN 1555581234. December 1994.

6. Dawson R. Engler, M. Frans Kaashoek. Exterminate All Operating System Abstractions. 1995.

7. Jerome H. Saltzer, Michael D. Schroeder. The Protection of Information in Computer Systems. 1975.

8. Peter Denning. Fault Tolerant Operating Systems. August 1976.

9. M. Frans Kaashoek, Dawson R. Engler, Gregory R. Ganger, Héctor M. Briceño, Russell Hunt, David Mazières, Thomas Pinckney, Robert Grimm, John Jannotti, Kenneth Mackenzie. Application Performance and Flexibility on Exokernel Systems. 1997.

10. UNSW. Secure Microkernel Project (seL4). 2008.

Comments

If you'd like to comment, just send me an email at wilkie@xomb.org, or reach me on Twitter or Mastodon. I would love to hear from you! Opinions, criticism, etc. are all welcome.


Copyright

All content on this domain, unless otherwise noted or linked from a different domain, is licensed as CC0.